Goals

The goal of the workshop is to propose an agenda for interdisciplinary research that incorporates robust knowledge of societal, political and psychological models. Such models can help us not only to explain reactions to the tools we build but also to design those tools to be useful and ethical.

Participants will be invited to:

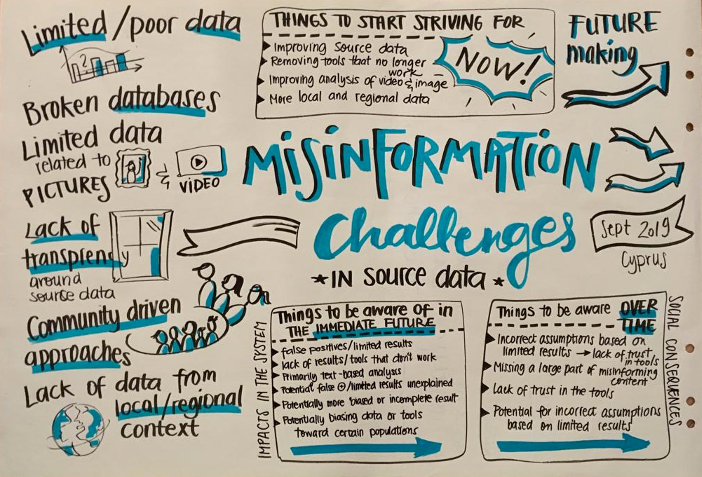

- Discuss challenges and obstacles related to misinformation from human and socio-technical perspectives;

- Challenge existing approaches to tackling misinformation and identify their limitations in socio-technical terms, including underlying assumptions, goals (e.g., preventing vs. correcting misinformation) and targeted users;

- Co-create innovative future scenarios with socio-technical solutions.

The workshop aims for a small and diverse group of participants from different disciplines in order to foster interaction and the exchange of ideas. Its agenda proposes engaging activities that challenge the status quo and promote creative thinking, so as to advance the state of the art effectively. Participants will be encouraged to treat the workshop as an opportunity to initiate synergies.

Examples of challenges and obstacles related to source data

Call for Papers and Participation

We invite researchers and practitioners who aim to engage actively with the social, societal and ethical problems of current socio-technical solutions for tackling misinformation to join us at the CHI 2021 Workshop "Opinions, Intentions, Freedom of Expression, ... , and Other Human Aspects of Misinformation Online".

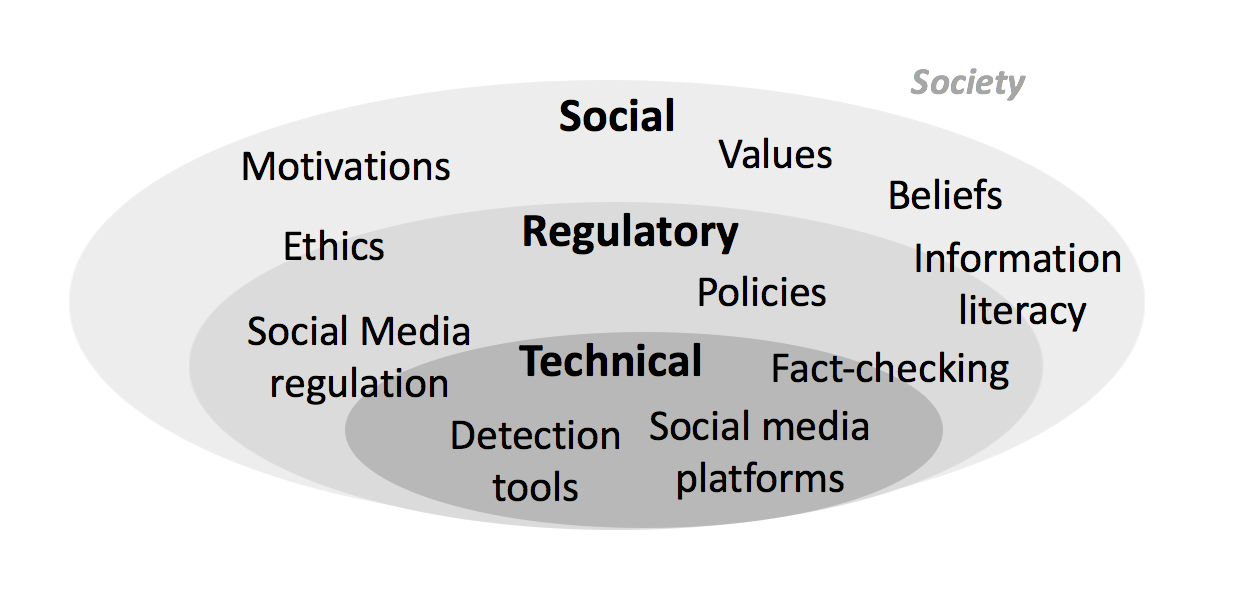

Information disorder online is a multilayered problem: there is no single, comprehensive solution capable of stopping misinformation, and existing approaches are all limited for different reasons, such as difficulties with end-user engagement, inadequate interaction design and a lack of explainability. Purely technical solutions that disregard the social structures behind the spread of misinformation can even be harmful, because they obscure the problem.

More comprehensive, ethical and impactful answers to the problem can only emerge from an interdisciplinary approach that involves computer scientists, social scientists and technology designers in the co-creation of features and delivery methods. Topics of interest include, but are not limited to:

- Censorship and freedom of speech

- Social and political polarisation, partisanship

- Disinformation campaigns and propaganda

- Conspiracy theories and rumours

- (Limitations of) Automated tools for misinformation detection and notifications

- Nudging strategies and persuasion

- Social network analysis

- Impact on communities or social groups

- Fact-checking

- Explainable AI

- Credibility of online content

- Behavioural studies

- Human values

- Legal and ethical aspects of socio-technical solutions

Participants will actively engage in activities for:

- Identifying challenges and obstacles related to misinformation from human and socio-technical perspectives;

- Challenging existing approaches and identifying their limitations in socio-technical terms, including underlying assumptions, goals, and targeted users;

- Co-creating innovative future scenarios with socio-technical solutions addressing impact and ethical aspects.